Is Your AI HIPAA-Compliant? Why That Question Misses the Point

Post Summary

HIPAA compliance focuses on data privacy and security but doesn’t address AI-specific risks like algorithmic bias, ethical use, and evolving cyber threats.

HIPAA doesn’t cover AI-specific challenges such as algorithmic transparency, real-time data processing, and ethical decision-making.

Organizations can adopt AI governance frameworks, conduct bias audits, and integrate AI into broader risk management strategies.

AI governance ensures ethical use, mitigates algorithmic bias, and aligns AI systems with regulatory and organizational goals.

Benefits include improved patient trust, reduced compliance risks, enhanced decision-making, and better alignment with future regulations.

Leaders can implement AI-specific risk frameworks, train teams on ethical AI use, and monitor regulatory changes like the EU AI Act.

HIPAA compliance alone isn’t enough to protect healthcare systems using AI. While HIPAA provides essential guidelines for safeguarding patient data, it wasn’t designed to address the complexities of AI technologies. AI introduces risks like re-identification of de-identified data, algorithmic bias, and vulnerabilities in real-time decision-making that HIPAA cannot fully manage.

Here’s why relying solely on HIPAA creates gaps:

- AI requires large datasets, often conflicting with HIPAA’s "minimum necessary" standard.

- Re-identification risks: AI can cross-reference datasets, exposing sensitive information.

- Vendor loopholes: Many AI vendors fall outside HIPAA’s scope.

- Transparency issues: AI’s "black box" nature makes data processing unclear.

- Bias in algorithms: AI systems can amplify inequities, impacting patient outcomes.

To address these challenges, healthcare organizations need stronger risk management strategies, including:

- Advanced data security measures.

- Monitoring for algorithmic bias.

- Clear AI governance policies.

- Integration of frameworks like NIST and HITRUST for better oversight.

The rapid adoption of AI in healthcare demands a shift from basic compliance to proactive risk management, ensuring patient safety, trust, and effective use of AI technologies.

Major Gaps in HIPAA's AI Coverage

The current regulations for AI in healthcare are riddled with gaps, leaving patient data exposed to risks that HIPAA, established in 1996, was never designed to handle. While HIPAA sets a strong foundation for traditional healthcare privacy, it struggles to keep up with the challenges brought by advanced AI systems.

Unclear Rules for AI in Healthcare

One of the biggest issues is the lack of clear regulatory guidelines for AI and machine learning in healthcare. The FDA has yet to issue specific rules for large language models like ChatGPT and Bard, leaving healthcare organizations to navigate these technologies without a roadmap.

This regulatory gray area creates serious vulnerabilities. For instance, AI developers and vendors handling protected health information (PHI) may operate outside HIPAA’s reach if they don’t qualify as business associates. This loophole means that patient data could be processed without the safeguards HIPAA typically enforces.

"HIPAA will continue to apply to the PHI that is ingested by the AI technology because PHI is being collected from providers and is being used by the Business Associate's AI technology to provide services on behalf of the Covered Entity", explains Todd Mayover, CIPP E/US, Attorney, Consultant, and Data Privacy Compliance Expert at Privacy Aviator LLC.

However, this protection relies on the existence of proper business associate agreements. Many AI applications fall outside these agreements entirely, leaving critical gaps in oversight. These issues set the stage for broader challenges, particularly in the areas of de-identification and data transparency.

De-Identified Data Problems and Re-Identification Risks

HIPAA’s de-identification standards, designed for simpler data systems, fall short in the face of AI’s analytical capabilities. The regulation assumes that "de-identified health information is no longer considered protected health information" [4], allowing its unrestricted use. But AI changes the game.

For example, a combination of a patient’s birth date, sex, and ZIP code can uniquely identify over half of the U.S. population [4]. AI systems, with their ability to cross-reference multiple datasets, can easily re-identify such data.
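To make the quasi-identifier problem concrete, here is a minimal sketch that counts how many records are unique on birth date, sex, and ZIP code alone. The records are invented for illustration, and the helper names (`unique_fraction`, `k_anonymity`, `generalize`) are hypothetical, not part of any HIPAA tooling.

```python
from collections import Counter

# Made-up records: (birth_date, sex, zip_code). No real patient data.
records = [
    ("1980-03-14", "F", "60637"),
    ("1980-03-14", "F", "60637"),
    ("1975-11-02", "M", "60615"),
    ("1975-04-19", "M", "60637"),
    ("1992-07-21", "F", "60615"),
    ("1992-07-21", "F", "60615"),
]


def unique_fraction(rows):
    """Fraction of rows whose quasi-identifier combination appears exactly once."""
    counts = Counter(rows)
    return sum(1 for row in rows if counts[row] == 1) / len(rows)


def k_anonymity(rows):
    """Size of the smallest group sharing one quasi-identifier combination."""
    return min(Counter(rows).values())


def generalize(row):
    """Coarsen a record: keep only the birth year and the first three ZIP digits."""
    birth_date, sex, zip_code = row
    return (birth_date[:4], sex, zip_code[:3])


coarse = [generalize(row) for row in records]
print(unique_fraction(records), k_anonymity(records))  # two of six rows are unique
print(unique_fraction(coarse), k_anonymity(coarse))    # no unique rows after coarsening
```

In this toy dataset, coarsening removes every unique row, but that only holds against an attacker who has nothing else to join on. Cross-referencing with outside datasets, as described above, can shrink those groups again.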

The case of Dinerstein v. Google illustrates this risk. A plaintiff sued the University of Chicago Medical Center, the University of Chicago, and Google, alleging that sharing de-identified electronic health records with Google created a high risk of re-identification due to Google’s access to vast amounts of personal data.

This case highlights HIPAA’s limited scope. Once PHI moves into spaces like personal health devices or social media, it often falls outside HIPAA’s protections. Even information that isn’t technically PHI but can infer health details becomes vulnerable. For example, posts about health conditions on social media could be linked with healthcare data by AI systems, bypassing HIPAA’s de-identification safeguards entirely. These risks make it clear that relying solely on HIPAA leaves healthcare organizations unprepared for AI’s unique challenges.

HIPAA Protections vs. AI Risks: A Comparison

When comparing HIPAA’s protections to the risks posed by AI, the gaps become glaringly obvious:

| Area | HIPAA Protection | AI Risk |
|---|---|---|
| Data Processing | Minimum necessary standard for PHI access | AI models require large datasets, conflicting with HIPAA’s "minimum necessary" standard |
| Vendor Oversight | Requires Business Associate Agreements (BAAs) | Many AI vendors operate outside the scope of BAAs |
| De-identification | Safe Harbor and Expert Determination methods | AI can re-identify data by cross-referencing datasets |
| Transparency | Patients have the right to access and understand data use | AI’s “black box” algorithms obscure how PHI is processed |
| Data Security | Administrative, physical, and technical safeguards | AI introduces new vulnerabilities like data poisoning |
| Breach Response | Structured breach notification requirements | AI breaches may go undetected due to the complexity of algorithms |

These disparities emphasize the need for more robust risk management strategies to address AI’s unique vulnerabilities.

The urgency of the problem is clear. AI usage among physicians nearly doubled in 2024 [3], and the largest healthcare breach in history, disclosed by Change Healthcare, Inc. in February 2024, impacted 190 million individuals [3]. Another breach, involving 483,000 patient records across six hospitals, stemmed from an AI workflow vendor [3].

These incidents highlight how traditional HIPAA protections fall short. Attackers are leveraging generative AI and large language models to scale attacks with unprecedented speed and complexity [5]. At the same time, AI systems remain vulnerable to data manipulation. If data is altered or poisoned, AI tools can produce harmful or even malicious results [5].

Healthcare organizations are left in a precarious position. While HIPAA compliance may offer a sense of security, it doesn’t address the evolving risks that AI introduces. Without updated regulations and stronger safeguards, these vulnerabilities will only grow.

Building Complete Cybersecurity Risk Management for AI

The limitations of HIPAA's coverage for AI make it clear that healthcare organizations need a broader approach to managing AI-related risks. While HIPAA provides a solid starting point, it falls short in addressing the unique challenges AI presents. A strong cybersecurity strategy must tackle both data protection and the specific vulnerabilities tied to AI systems.

According to McKinsey, AI could save the U.S. healthcare system up to $360 billion annually. Despite this potential, only 16% of healthcare organizations currently have a unified governance policy for AI in place[6]. This gap highlights the urgency of implementing targeted controls to manage AI risks effectively.

Core Areas of AI Risk Management

A comprehensive AI risk management framework should focus on four key areas that go beyond HIPAA's scope: data security, algorithmic transparency, bias monitoring, and continuous threat detection.

- Data security: AI systems require large datasets, which can clash with HIPAA's "minimum necessary" standard. Organizations must implement advanced encryption and secure data handling practices to protect sensitive information while meeting AI's data needs.

- Algorithmic transparency: Many AI systems function as "black boxes", making it difficult to understand how decisions are made. Clear documentation of decision-making processes and maintaining detailed audit trails are essential for accountability.

- Bias monitoring: HIPAA does not address algorithmic bias, leaving a critical gap. For instance, a study by researchers at the University of California, Berkeley and Chicago Booth found that Black patients made up only about 18% of those a healthcare prediction algorithm flagged for extra care, even though they would have made up about 47% had the flags reflected actual medical need. This occurred because the algorithm used healthcare costs as a proxy for medical need[6]. Addressing such biases requires rigorous monitoring and corrective measures.

- Continuous threat detection: Traditional security measures often fail to detect AI-specific threats like data poisoning or adversarial inputs. Organizations need advanced monitoring systems that can identify unusual patterns in AI behavior and respond to emerging threats in real time.
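For the bias-monitoring item above, one simple audit compares a group's share of flagged patients with its share of patients who actually have high need. The sketch below assumes an independent clinical measure of need is available. The `Patient` fields, the group labels, and the data are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Patient:
    group: str       # demographic group label (hypothetical)
    flagged: bool    # did the model flag this patient for extra care?
    high_need: bool  # independent clinical measure of need (assumed to exist)


def share_of_group(patients, group, predicate):
    """Share of `group` among the patients selected by `predicate`."""
    selected = [p for p in patients if predicate(p)]
    if not selected:
        return 0.0
    return sum(p.group == group for p in selected) / len(selected)


def flag_share_gap(patients, group):
    """Group's share among high-need patients minus its share among flagged patients."""
    need_share = share_of_group(patients, group, lambda p: p.high_need)
    flagged_share = share_of_group(patients, group, lambda p: p.flagged)
    return need_share - flagged_share


# Invented cohort: group B is half of the high-need patients but a fifth of the flags.
patients = (
    [Patient("A", True, True)] * 4
    + [Patient("B", True, True)]
    + [Patient("B", False, True)] * 3
    + [Patient("A", False, False)] * 2
)
print(round(flag_share_gap(patients, "B"), 2))  # 0.3
```

A gap near zero suggests flags track need for that group. A large positive gap is a signal to investigate the model's target variable and training data.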

The rapid pace of AI development underscores the importance of ongoing evaluation. While some AI tools achieve accuracy rates above 94% in imaging diagnostics[6], others underperform. For example, in an external validation study, Epic's sepsis predictor missed 67% of sepsis cases and achieved an area under the curve of only 0.63[6]. These inconsistencies highlight the need for continuous monitoring to ensure reliability.
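A single headline metric can hide how many true cases a model misses, which is why monitoring should track sensitivity alongside overall accuracy. The counts below are invented to show the effect and are not taken from any cited study.

```python
def sensitivity(tp, fn):
    """Share of true cases the model catches: TP / (TP + FN)."""
    return tp / (tp + fn)


def accuracy(tp, fn, fp, tn):
    """Share of all predictions that are correct."""
    return (tp + tn) / (tp + fn + fp + tn)


# Hypothetical confusion matrix for 1,000 patients, 100 of whom have the condition.
tp, fn, fp, tn = 33, 67, 50, 850

print(f"sensitivity = {sensitivity(tp, fn):.2f}")       # 0.33: misses 67 of 100 cases
print(f"accuracy    = {accuracy(tp, fn, fp, tn):.3f}")  # 0.883 despite the misses
```

Because most patients do not have the condition, a model can look accurate while missing two-thirds of the cases that matter.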

Adding Industry Frameworks to HIPAA

To build a more resilient defense, healthcare organizations should integrate established industry frameworks alongside their HIPAA compliance efforts. Frameworks like the NIST AI Risk Management Framework and HITRUST certification provide structured approaches that address gaps in HIPAA's protections.

The NIST framework offers detailed steps for identifying and managing AI risks, helping organizations move beyond mere compliance checklists to achieve meaningful risk reduction. Meanwhile, HITRUST certification combines elements of HIPAA, ISO 27001, and NIST standards, and is linked to significantly lower data breach rates[7]. These frameworks help organizations tackle AI-specific vulnerabilities by setting clear criteria for vendor transparency, audit capabilities, and algorithm maintenance.

Both NIST and HITRUST emphasize secure-by-design principles, such as early threat modeling, regular vulnerability testing, and robust encryption. Additionally, guidelines from agencies like the U.S. Cybersecurity and Infrastructure Security Agency (CISA) and the U.K. National Cyber Security Centre (NCSC) stress the importance of ongoing monitoring and collaboration between developers and users[7].

Healthcare organizations should also consider the environmental and operational impacts of AI. Training a large AI model can emit over 626,000 pounds of carbon dioxide[6]. Balancing performance with resource efficiency is crucial, alongside maintaining security and sustainability.

Adding AI Governance to Risk Management Systems

Traditional risk management frameworks often fall short when it comes to addressing the unique challenges posed by AI. With only 25% of global businesses having solid AI governance practices in place - despite 65% actively using AI - this gap is particularly concerning. Consider this: healthcare fraud alone costs nearly $100 billion annually [11]. The solution? Integrating structured AI governance into existing risk management workflows.

How AI Governance Committees Work

AI governance committees act as the backbone of responsible AI oversight, managing risks from the early stages of development through deployment. These committees are made up of experts from various fields, ensuring AI systems are used responsibly and transparently in healthcare settings.

A well-rounded committee includes diverse expertise: healthcare providers, AI specialists, ethicists, legal advisors, patient advocates, and data scientists [9]. This mix ensures that technical capabilities align with clinical needs, regulatory standards, and ethical principles. To avoid confusion, the committee's structure should follow a RACI (Responsible, Accountable, Consulted, Informed) matrix, which clarifies decision-making roles [10].
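A RACI matrix is easy to keep as plain data and check automatically. This sketch uses the committee roles named above, but the activities and letter assignments are hypothetical. It also assumes the common convention that each activity has exactly one Accountable role and at least one Responsible role.

```python
# Roles follow the committee makeup described above; the activities and
# letter assignments are hypothetical placeholders.
RACI = {
    "Draft AI use policy": {
        "Legal advisor": "A",
        "Ethicist": "R",
        "AI specialist": "C",
        "Patient advocate": "I",
    },
    "Approve clinical deployment": {
        "Healthcare provider": "A",
        "Data scientist": "R",
        "Legal advisor": "C",
        "Patient advocate": "C",
    },
}


def raci_problems(matrix):
    """Return activities lacking exactly one Accountable or at least one Responsible."""
    problems = []
    for activity, assignments in matrix.items():
        letters = list(assignments.values())
        if letters.count("A") != 1 or letters.count("R") < 1:
            problems.append(activity)
    return problems


print(raci_problems(RACI))  # [] when every activity has a clear owner
```

Running a check like this whenever the matrix changes catches activities with no owner, or with two competing owners, before they cause confusion.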

The committee's main responsibilities revolve around four key areas: creating policies, conducting ethical reviews of AI projects, approving AI deployments, and maintaining clear communication among stakeholders [9]. These duties must evolve alongside the AI systems they oversee. IBM's 2019 AI Ethics Board, composed of legal, technical, and policy experts, shows how cross-disciplinary teams can ensure accountability while fostering innovation [12].

"AI governance encompasses the policies, procedures, and ethical considerations required to oversee the development, deployment, and maintenance of AI systems." - Palo Alto Networks [10]

A major focus for these committees is addressing AI explainability. Research highlights that 80% of business leaders view issues like explainability, ethics, bias, and trust as significant barriers to adopting generative AI [12]. This makes transparency a top priority for governance efforts.

Best Methods for AI Risk Integration

To effectively integrate AI governance into existing risk management systems, a balance between automation and human oversight is critical. Combining automated processes with human-in-the-loop validation ensures both efficiency and accountability.

Automated workflows handle routine tasks, while human reviewers focus on complex decisions. This approach reflects the reality that 80% of organizations now have a dedicated part of their risk function specifically for AI-related risks [12]. Risk frameworks should categorize AI applications by their potential impact, applying targeted safeguards accordingly [10].
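Impact-based tiering with human-in-the-loop routing can be sketched in a few lines. The tier names, the two yes/no attributes, and the routing rule are illustrative assumptions. Real criteria would come from the organization's own governance policy.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class AIUseCase:
    name: str
    handles_phi: bool
    influences_clinical_decisions: bool


def classify(use_case: AIUseCase) -> RiskTier:
    """Assign a tier using illustrative rules (assumed, not from a standard)."""
    if use_case.influences_clinical_decisions:
        return RiskTier.HIGH
    if use_case.handles_phi:
        return RiskTier.MEDIUM
    return RiskTier.LOW


def route(use_case: AIUseCase) -> str:
    """Low-impact cases go through automation; everything else waits for a person."""
    return "automated_approval" if classify(use_case) is RiskTier.LOW else "human_review"


for case in (
    AIUseCase("sepsis alerting", handles_phi=True, influences_clinical_decisions=True),
    AIUseCase("claims coding assistant", handles_phi=True, influences_clinical_decisions=False),
    AIUseCase("cafeteria menu chatbot", handles_phi=False, influences_clinical_decisions=False),
):
    print(case.name, classify(case).value, route(case))
```

The point of the design is that automation only ever approves the lowest tier. Anything touching PHI or clinical decisions reaches a human reviewer.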

Comprehensive policy documentation is essential. These policies should align with ethical principles like fairness, transparency, and patient-centered care [9]. They must also cover data governance, algorithm transparency, and incident response plans tailored to AI-related challenges.

Vendor-specific risk assessments are another critical component. Third-party AI tools often introduce unique vulnerabilities, so organizations should regularly evaluate vendor transparency, audit capabilities, and algorithm maintenance practices. Monitoring regulatory changes across jurisdictions is also necessary to ensure compliance [10].

Training programs tailored to specific roles within the organization are vital for effective governance. Regular sessions help employees understand their responsibilities and stay up-to-date with evolving regulations [9]. Additionally, continuous monitoring mechanisms should track AI usage across the workforce - what type of AI is being accessed, how it's being used, and for what purposes (clinical or non-clinical) [9]. These measures help identify potential risks early on.
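The usage tracking described above (which AI tool, how it is used, and whether the purpose is clinical) maps naturally onto a small event record. The field names, tool names, and allowlist below are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUsageEvent:
    user_role: str
    tool: str
    purpose: str
    clinical: bool


APPROVED_TOOLS = {"ambient-scribe", "imaging-triage"}  # hypothetical allowlist


def summarize(events):
    """Count events per (tool, clinical/non-clinical) pair."""
    return Counter((e.tool, "clinical" if e.clinical else "non-clinical") for e in events)


def unapproved(events):
    """Sorted names of tools that appear in the log but not on the allowlist."""
    return sorted({e.tool for e in events} - APPROVED_TOOLS)


events = [
    AIUsageEvent("physician", "ambient-scribe", "visit note drafting", True),
    AIUsageEvent("physician", "ambient-scribe", "visit note drafting", True),
    AIUsageEvent("billing", "public-chatbot", "appeal letter drafting", False),
]
print(summarize(events))
print(unapproved(events))  # ['public-chatbot']
```

Even a log this simple surfaces shadow AI use: any tool that shows up in the events but not on the allowlist is a candidate for review.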

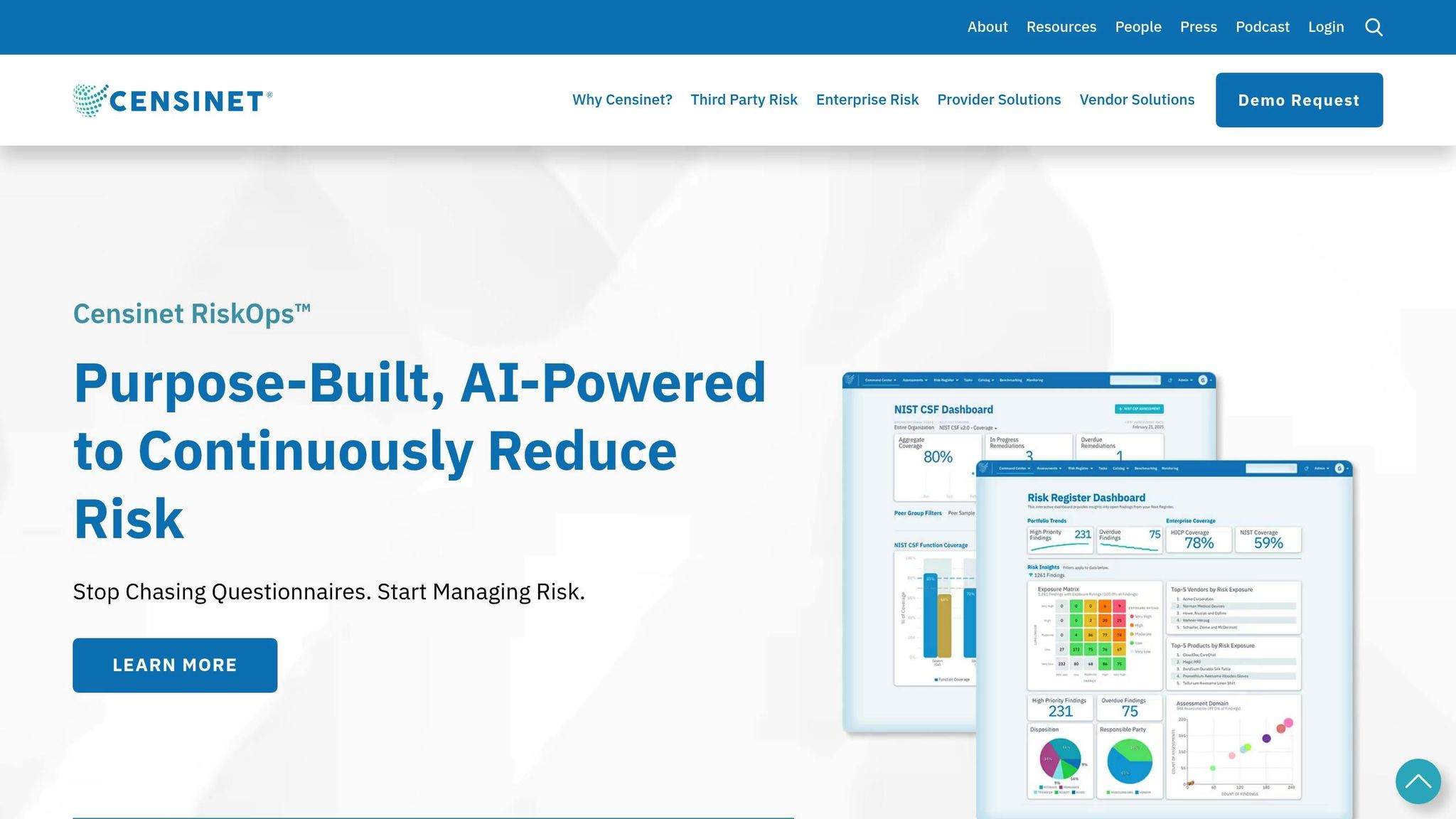

Using Censinet RiskOps™ for AI Risk Management

Censinet RiskOps™ offers healthcare organizations a powerful platform to manage AI risks. Through automation, centralized dashboards, and collaborative governance workflows, it addresses challenges unique to scaling AI governance without compromising safety or oversight.

Censinet AI™ streamlines the third-party risk assessment process. Vendors can complete security questionnaires in seconds, while the platform automatically summarizes evidence, documents key integration details, and identifies fourth-party risks. This automation reduces assessment time while maintaining strict risk standards.

A human-in-the-loop process ensures that automated workflows remain under the control of risk teams. Configurable rules and review processes allow healthcare leaders to scale operations without sacrificing oversight. This balance is crucial for managing AI risks at scale while safeguarding patient safety.

"As a foundational issue, trust is required for the effective application of AI technologies. In the clinical health care context, this may involve how patients perceive AI technologies." - Congressional Research Service [8]

Censinet RiskOps™ acts as a centralized command center for AI governance. It automatically routes key findings and tasks to the appropriate stakeholders, ensuring timely reviews and approvals. This streamlined process keeps everyone aligned and accountable.

The platform's real-time AI risk dashboard consolidates policies, risks, and tasks into one unified view, eliminating the fragmentation that often plagues AI governance. Healthcare organizations can monitor compliance, track risk metrics, and identify new threats - all from a single interface.

Censinet RiskOps™ also integrates seamlessly with existing healthcare IT systems. It can pull metadata from Commercial-off-the-Shelf (COTS) applications and maintain a comprehensive inventory of AI use cases. By automating the creation of AI risk assessment objects, the platform ensures no detail is overlooked.

As the healthcare AI market is projected to grow to around $187.95 billion by 2030 [11], Censinet RiskOps™ provides the scalable infrastructure needed to maintain high governance standards. Its collaborative network allows secure sharing of cybersecurity and risk data among healthcare providers and vendors, creating a robust ecosystem for managing AI risks effectively.

Tools and Methods for Evaluating AI in Healthcare Cybersecurity

With cybersecurity spending in healthcare projected to hit a staggering $458.9 billion by 2025 [16], evaluating AI systems in this field has never been more critical. The stakes are high - not just for protecting sensitive data but also for improving patient outcomes, which AI could enhance by 30–40% while reducing treatment costs by up to 50% [16]. To ensure both security and clinical success, healthcare organizations need a thorough and flexible framework for evaluating AI.

Steps for Comprehensive AI Evaluation

Evaluating AI systems effectively requires a multi-layered approach that addresses security, compliance, and ethical considerations. Healthcare organizations must adapt their cybersecurity strategies to meet the unique challenges posed by AI integration in clinical settings [14].

Engage AI partners early. From the outset of any business relationship, involve IT, legal, and compliance teams in discussions about cybersecurity. These conversations should happen before contracts are finalized [14].

The evaluation process should focus on six key areas: regulatory compliance, security measures, healthcare-specific experience, third-party audits, data management, and risk management [14].

Risk assessments are essential. Regular assessments help identify vulnerabilities and ensure that AI systems meet clinical safety standards throughout their lifecycle. This is particularly important given that healthcare organizations currently achieve only 31% coverage across the four NIST AI Risk Management Framework functions: Govern (39%), Map (33%), Measure (24%), and Manage (28%) [15].
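The 31% figure is consistent with a simple unweighted average of the four function scores. That averaging method is an assumption here, since the source does not say how the overall number was computed.

```python
# Coverage percentages quoted above for the four NIST AI RMF functions.
coverage = {"Govern": 39, "Map": 33, "Measure": 24, "Manage": 28}

average = sum(coverage.values()) / len(coverage)
weakest = min(coverage, key=coverage.get)

print(average)  # 31.0
print(weakest)  # Measure
```

The breakdown is more useful than the average. Measure, the function concerned with testing and quantifying AI risk, is the weakest of the four and a natural place to prioritize.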

Routine audits are another critical component. These audits help maintain compliance and address vulnerabilities promptly. High-profile incidents, such as the NHS ransomware attacks that caused damages of approximately £92 million ($120 million), underscore the importance of a robust evaluation process [16].

Centralized platforms can also provide better oversight by offering complete visibility across AI deployments. Strong governance frameworks, which include regular risk assessments, clear data protocols, and ongoing vendor monitoring, are crucial for maintaining security and compliance [14].

Finally, organizations must address disparities in cybersecurity functions. Research shows a significant gap between Respond (85%) and Recover (78%) functions compared to others like Govern, Identify, Protect, and Detect [15]. This imbalance suggests that while organizations may handle incidents well, they often fall short in prevention and comprehensive risk management.

How Censinet Improves AI Risk Evaluation

Censinet RiskOps™ offers a tailored solution to the challenges of AI evaluation in healthcare. Designed specifically for this complex industry, the platform integrates seamlessly into existing cybersecurity strategies. As Matt Christensen, Sr. Director GRC at Intermountain Health, points out:

"Healthcare is the most complex industry... You can't just take a tool and apply it to healthcare if it wasn't built specifically for healthcare" [13].

Streamlined assessments and documentation. Censinet AI™ simplifies the evaluation process by enabling vendors to complete security questionnaires quickly. The platform automatically compiles vendor evidence, generates detailed risk summaries, and organizes all relevant assessment data.

Human-in-the-loop decision-making. Automation supports, rather than replaces, critical decisions. Risk teams maintain control through customizable rules and review processes, which allows healthcare leaders to scale their operations without compromising patient safety or care delivery.

Real-time risk dashboards provide a unified view of AI-related policies, risks, and tasks. Acting as a central hub, these dashboards eliminate fragmentation and route key findings to the appropriate stakeholders for review and action.

Terry Grogan, CISO of Tower Health, highlighted the efficiency gains from using Censinet:

"Censinet RiskOps allowed 3 FTEs to go back to their real jobs, enabling them to do more risk assessments with only 2 FTEs required" [13].

This kind of resource optimization is vital as healthcare organizations face increasing pressure to evaluate more AI systems with limited staff.

Benchmarking and collaboration. Automated benchmarking tools allow organizations to compare their AI risk posture against industry standards and peers. This helps identify areas needing improvement and provides clear metrics for board-level reporting. Additionally, the platform facilitates secure sharing of risk data among healthcare organizations and vendors [13]. By learning from shared insights and adopting best practices, organizations can strengthen their defenses against AI-related cybersecurity threats.

The importance of comprehensive evaluation tools is underscored by incidents like the May 2024 ransomware attack on Ascension Health, which disrupted IT networks across 15 states and exposed sensitive data. John Riggi, national cybersecurity advisor for the American Hospital Association, commended Ascension's response as "a role model for other organizations" [14].

Their fast public disclosure, dedicated update website, and clear communication set a standard for handling such crises. By refining their evaluation processes, healthcare organizations can move beyond a compliance-only mindset and adopt a more holistic approach to AI risk management.

Conclusion: Moving Beyond HIPAA for AI Risk Management

Asking, "Is your AI HIPAA-compliant?" reflects an outdated way of thinking. HIPAA, designed for traditional healthcare data, falls short when it comes to addressing the fast-moving and complex challenges posed by AI. This leaves critical gaps in protecting patient data [1]. With 66% of physicians now incorporating AI into their practices - up from 38% in 2023 [1] - it's clear that a more robust strategy is urgently needed.

Tony UcedaVelez, CEO and founder of VerSprite Security, highlights the growing concerns:

"If we hadn't had a problem with data governance before, we have it now with AI. It's a new paradigm for [personally identifiable information] governance" [1].

The risks are escalating. AI-enabled health technologies now rank as the top technology hazard in healthcare [1]. Recent breaches have made it painfully clear that relying on HIPAA alone leaves many vulnerabilities exposed. As the use of AI grows, so do the challenges of securing patient data. Traditional protections are no longer enough, particularly as data moves into cloud-based AI applications. Regulators are already signaling an increased focus on AI's impact on healthcare privacy [2], making it imperative for organizations to adopt a more dynamic approach to risk management.

Healthcare organizations must move beyond static HIPAA compliance and embrace a more comprehensive risk management framework. This involves integrating AI governance into existing systems, creating clear protocols for AI use, and ensuring continuous monitoring throughout the AI lifecycle. Tools specifically designed for healthcare's unique needs - offering real-time visibility, automated risk assessments, and collaborative oversight - are essential to addressing these challenges.

Ed Gaudet, CEO of Censinet, underscores the urgency:

"With ransomware growing more pervasive every day, and AI adoption outpacing our ability to manage it, healthcare organizations need faster and more effective solutions than ever before to protect care delivery from disruption" [17].

The focus must shift from checking compliance boxes to building a proactive and integrated approach to managing AI risks. The real question isn't whether your AI meets HIPAA standards, but whether your organization is equipped with the tools and strategies to protect patients, maintain trust, and fully realize the transformative power of AI in healthcare.

FAQs

Why isn’t HIPAA compliance enough to address AI risks in healthcare?

While HIPAA compliance plays a key role in safeguarding patient data, it doesn't quite tackle the challenges that come with integrating AI in healthcare. For instance, HIPAA doesn't fully account for how AI processes massive datasets or the new types of cybersecurity threats that come with advanced technology.

On top of that, HIPAA doesn't address broader issues like ethical concerns or the risk of bias in AI algorithms. To handle these challenges effectively, healthcare organizations need a more thorough strategy. This means implementing strong risk management frameworks, ensuring privacy protections are in place, and continuously assessing AI systems to meet both ethical and security benchmarks.

How can healthcare organizations reduce the risk of patient data being re-identified when using AI?

Healthcare organizations can reduce the chances of re-identification by taking a layered approach to data privacy. This involves setting up strict access controls so only authorized individuals can handle sensitive information and anonymizing data to strip away identifiable details wherever feasible.

On top of that, conducting regular risk assessments is essential to pinpoint weaknesses. Incorporating privacy-preserving technologies like differential privacy or federated learning can also make a big difference. These tools allow AI to analyze data securely without exposing patient information. By weaving these measures into a comprehensive risk management plan, healthcare providers can protect patient privacy while continuing to embrace advancements in technology.
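As one illustration of differential privacy, the sketch below answers a counting query with Laplace noise, the classic mechanism for this kind of query. The function names and the toy data are assumptions. A production system should use a vetted privacy library rather than hand-rolled noise.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count that satisfies epsilon-differential privacy.

    A counting query has sensitivity 1 (adding or removing one person changes
    the count by at most 1), so the noise scale is 1 / epsilon.
    """
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


ages = [34, 71, 68, 45, 80, 52]  # invented data
noisy = private_count(ages, lambda age: age >= 65, epsilon=1.0)
print(noisy)  # close to the true count of 3, but different on each run
```

A smaller `epsilon` means more noise and stronger privacy. The trade-off is less precise statistics, so the privacy budget becomes a governance decision as much as a technical one.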

What additional strategies and frameworks can enhance AI risk management beyond HIPAA compliance?

To address AI-related risks in healthcare effectively, organizations need to think beyond just HIPAA compliance. A more comprehensive and forward-thinking approach is crucial. One way to start is by leveraging frameworks like the NIST AI Risk Management Framework, which offers practical guidance for identifying, evaluating, and mitigating risks tied to AI systems. Another key step is forming an AI governance committee to oversee the responsible and secure use of AI within the organization.

It's also important to establish well-defined policies and procedures specifically designed for AI technologies. Regular staff training and periodic audits are equally essential to spot and fix any vulnerabilities. By taking these measures, healthcare providers can ensure their AI systems meet not only compliance requirements but also broader standards for cybersecurity and ethical practices.

Key Points:

Why does asking if AI is HIPAA-compliant miss the point?

- Definition: HIPAA compliance ensures the privacy and security of protected health information (PHI), but it doesn’t address the unique risks posed by AI in healthcare.

- Limitations: HIPAA focuses on data protection but doesn’t cover AI-specific challenges like algorithmic bias, ethical decision-making, and real-time data processing.

- Impact: As AI adoption grows, relying solely on HIPAA creates compliance gaps and increases the risk of ethical and operational failures.

What are the limitations of HIPAA when applied to AI?

- Algorithmic Bias: HIPAA doesn’t address the risks of biased AI models, which can lead to unequal treatment of patients.

- Transparency: AI systems often operate as “black boxes,” making it difficult to ensure compliance with HIPAA’s principles of accountability.

- Real-Time Data Processing: HIPAA was designed for static data environments, not the dynamic, real-time data processing capabilities of AI.

- Ethical Use: HIPAA doesn’t provide guidance on the ethical implications of AI-driven decision-making in healthcare.

How can healthcare organizations address AI risks beyond HIPAA compliance?

- Adopt AI Governance Frameworks: Use frameworks like NIST’s AI RMF to guide risk assessments and ensure ethical AI use.

- Conduct Bias Audits: Regularly evaluate AI models for bias and ensure diverse, representative training datasets.

- Integrate AI into Risk Management: Align AI systems with broader risk management strategies to address vulnerabilities and compliance gaps.

- Monitor Regulatory Changes: Stay updated on evolving regulations like the EU AI Act and FDA guidelines for AI in healthcare.

- Train Teams: Educate staff on the ethical and operational risks of AI to ensure responsible use.

What role does AI governance play in healthcare?

- Ethical Use: AI governance ensures that AI systems are used responsibly, mitigating risks like algorithmic bias and data privacy violations.

- Regulatory Alignment: Governance frameworks align AI systems with regulations like HIPAA, GDPR, and the EU AI Act.

- Risk Mitigation: Proactive governance addresses emerging risks, such as AI-driven cyberattacks and data misuse, before they escalate.

What are the benefits of addressing AI risks beyond HIPAA compliance?

- Improved Patient Trust: Demonstrating a commitment to ethical AI use builds confidence among patients and stakeholders.

- Reduced Compliance Risks: Aligning AI systems with evolving regulations minimizes the risk of penalties and reputational damage.

- Enhanced Decision-Making: Transparent and unbiased AI models improve the quality of care and operational efficiency.

- Future-Proofing: Preparing for AI-specific risks ensures alignment with future regulatory requirements and technological advancements.

How can healthcare leaders prepare for AI-related risks?

- Implement AI-Specific Risk Frameworks: Use tools and frameworks to identify and mitigate AI risks proactively.

- Train Teams: Educate staff on recognizing and addressing AI-related vulnerabilities, such as bias and data misuse.

- Monitor Regulatory Changes: Stay informed about new regulations like the EU AI Act and updated HIPAA guidelines to ensure compliance.

- Collaborate Across Teams: Involve IT, compliance, and clinical teams in AI governance to ensure a unified approach.